Why Lighthouse Performance Score Doesn’t Work

Karolina Szczur

December 9, 2021


For a long time, the Lighthouse Performance Score was the page speed metric to track. Sharing a perfect 100 Performance Score was often considered a sign of success. But as our understanding of page speed evolves, we need to critically evaluate whether the metrics we’re using are still relevant and helpful.

At Calibre, we believe Performance Score can do more harm than good. This isn’t only a matter of how the score is designed. The problem is more complex and stems from the nuances of web performance monitoring and of communicating effectively about speed. Here’s why Performance Score might be failing you.

Table of Contents

- What is Lighthouse Performance Score?

- It’s impossible to describe user experience with one metric

- Performance Score is relative to device type and speed trends

- Performance Score is prone to variability

- Performance Score doesn’t make it easier to talk about speed

- Performance Score can be gamed

- Performance Score doesn’t matter for SEO

- Performance Score has been overtaken by Core Web Vitals

What is Lighthouse Performance Score?

The Performance Score is a grade on a 0-100 scale that aims to portray the overall picture of page speed. When testing your sites and apps, Lighthouse returns several scores for groups of audits: Performance, Accessibility, Best Practices and SEO. As of Lighthouse 10, the Performance Score is calculated from the following metrics and weights:

| Metric | Weight |
|---|---|
| Total Blocking Time | 30% |
| Largest Contentful Paint (Core Web Vital) | 25% |
| Cumulative Layout Shift (Core Web Vital) | 25% |
| First Contentful Paint | 10% |
| Speed Index | 10% |
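The weights in the table above can be read as a weighted average of per-metric scores. Here is a minimal sketch under that reading, assuming each metric has already been converted to a score between 0.0 and 1.0. The function name `performance_score` and the example scores are hypothetical; this is an illustration, not Lighthouse’s own code.

```python
# Weights from the Lighthouse 10 table above, in percent (they sum to 100).
WEIGHTS = {
    "total_blocking_time": 30,
    "largest_contentful_paint": 25,
    "cumulative_layout_shift": 25,
    "first_contentful_paint": 10,
    "speed_index": 10,
}


def performance_score(metric_scores: dict[str, float]) -> int:
    """Weighted average of per-metric scores (each 0.0-1.0), on a 0-100 scale."""
    missing = WEIGHTS.keys() - metric_scores.keys()
    if missing:
        raise ValueError(f"missing metric scores: {sorted(missing)}")
    # Weights are percentages, so the weighted sum is already on a 0-100 scale.
    total = sum(weight * metric_scores[name] for name, weight in WEIGHTS.items())
    return round(total)


# Hypothetical per-metric scores for a single lab run.
example = {
    "total_blocking_time": 0.6,
    "largest_contentful_paint": 0.9,
    "cumulative_layout_shift": 1.0,
    "first_contentful_paint": 0.95,
    "speed_index": 0.8,
}
print(performance_score(example))  # → 83
```

The weighting also explains why one metric can dominate the grade: a page that scores perfectly on everything except Total Blocking Time, where it scores 0, still lands at 70.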

In addition to metric weights, Lighthouse uses real-website data from the HTTP Archive to set two control points of reference for each metric. Those points shape a log-normal scoring curve that converts a raw metric value into a score, and your reading is then categorised as “poor”, “needs improvement”, or “good”. The control points depend on device type, so the ranges differ for mobile and desktop devices. You can inspect the scoring in the Lighthouse Performance Score calculator.
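To make the control points less abstract, here is a minimal sketch of a log-normal scoring curve. It assumes the curve passes through a “median” control point that scores 0.5 and a “good” control point that scores 0.9, which is how we understand Lighthouse’s scoring function; treat that, the helper names and especially the control-point values as assumptions. The values below are hypothetical, chosen only to show that a more lenient (mobile) pair of points gives the same raw metric value a higher score.

```python
import math

# erfc(x) == 0.2 at this x, so the curve below scores 0.9 at the "good" point.
INVERSE_ERFC_ONE_FIFTH = 0.9061938024368232


def log_normal_score(value: float, good: float, median: float) -> float:
    """Map a raw metric value (lower is better) to a score between 0.0 and 1.0.

    The curve scores 0.5 at `median` and 0.9 at `good` (requires 0 < good < median).
    """
    if not 0 < good < median:
        raise ValueError("expected 0 < good < median")
    if value <= 0:
        return 1.0
    # Distance from the median on a log scale, normalised so that
    # value == good maps to -INVERSE_ERFC_ONE_FIFTH.
    standardized = (
        math.log(value / median) * INVERSE_ERFC_ONE_FIFTH / -math.log(good / median)
    )
    return (1 - math.erf(standardized)) / 2


def category(score_0_to_100: float) -> str:
    """Lighthouse's colour bands: 0-49 poor, 50-89 needs improvement, 90-100 good."""
    if score_0_to_100 >= 90:
        return "good"
    if score_0_to_100 >= 50:
        return "needs improvement"
    return "poor"


# Hypothetical control points (milliseconds) for one metric.
MOBILE = {"good": 2500, "median": 4000}
DESKTOP = {"good": 1200, "median": 2400}

value_ms = 3000  # the same raw reading on both device types
mobile_score = log_normal_score(value_ms, **MOBILE)
desktop_score = log_normal_score(value_ms, **DESKTOP)
print("mobile:", round(mobile_score * 100), category(mobile_score * 100))
print("desktop:", round(desktop_score * 100), category(desktop_score * 100))
```

With these illustrative points, the identical 3000 ms reading lands in a better band on mobile than on desktop, which is why a score only means something alongside its device type.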

It’s impossible to describe user experience with one metric

The Lighthouse team designed the Performance Score to provide an overall picture of page speed with one grade. With at least 40 different metrics quantifying various aspects of speed, the idea of a single measurement is tempting.

In theory, this abstraction lets us communicate about speed across the entire organisation without explaining the nuances, because we’re talking about a single number on a 0-100 scale.

While the Performance Score is well built and can provide value, using it as a definitive signal guaranteeing a good user experience is dangerous. Here’s why we can’t depend on Performance Score alone:

- It’s accurate only for the controlled, predefined lab (synthetic) test settings it was collected under, not for overall performance.

- It reflects only a selected set of metrics, so other aspects of speed can remain problematic.

- It’s hugely dependent on test infrastructure and location, so it’s easy to inflate the results by testing on fast machines only.

- Because it aims to be all-encompassing, it encourages omitting critical details, such as test settings and distributions.

- There’s a gap in knowledge about how the score is calculated and why it fluctuates.

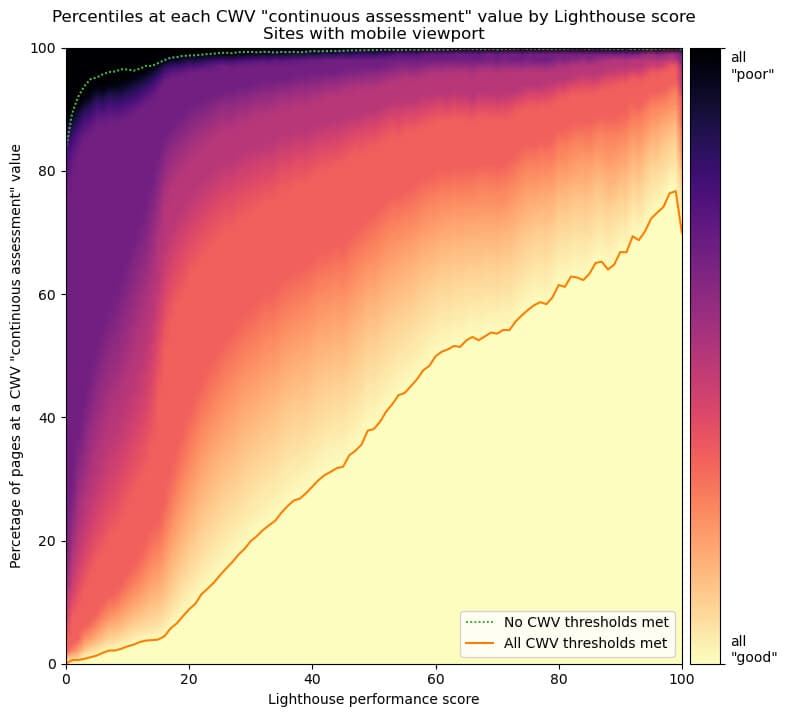

Performance Score is complex and requires context to provide value when talking about page speed. While synthetic measurements are useful for reproducing scenarios and validating speed experiments, we can’t forget real user data. Here’s where it gets fascinating. According to research conducted by Brendan Kenny, based on the September 2021 HTTP Archive dataset:

Of the pages that got a 90+ in Lighthouse in September, 43% didn’t meet one or more Core Web Vitals threshold.

There is some correlation between the Performance Score and how well your site will do for real users. Still, lab testing alone doesn’t capture all speed issues (and, similarly, field data can miss other speed problems). That’s why relying on Performance Score is not a bulletproof strategy. We recommend measuring both in the lab and in the field with a selection of metrics, including, but not limited to, Core Web Vitals.
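Field assessment of Core Web Vitals is usually done at the 75th percentile of real-user samples, which is a different question from a single lab grade. The sketch below shows that check. The thresholds are the “good” limits Google publishes (INP replaced First Input Delay as a Core Web Vital in March 2024); the sample values and the helper names `p75` and `assess_core_web_vitals` are hypothetical, and the nearest-rank percentile is one simple choice among several.

```python
import math

# Published "good" thresholds for Core Web Vitals, assessed at the 75th percentile.
GOOD_THRESHOLDS = {"lcp_ms": 2500, "cls": 0.1, "inp_ms": 200}


def p75(samples: list[float]) -> float:
    """Nearest-rank 75th percentile of a non-empty list of field samples."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))  # 1-based nearest rank
    return ordered[rank - 1]


def assess_core_web_vitals(field_data: dict[str, list[float]]) -> dict[str, tuple[float, bool]]:
    """Return {metric: (p75 value, passes)}; field_data needs samples for every metric."""
    results = {}
    for metric, threshold in GOOD_THRESHOLDS.items():
        value = p75(field_data[metric])
        results[metric] = (value, value <= threshold)
    return results


# Hypothetical real-user samples for one page.
field_data = {
    "lcp_ms": [3900, 1800, 2400, 2100],
    "cls": [0.02, 0.4, 0.05, 0.3],
    "inp_ms": [80, 500, 120, 150],
}
results = assess_core_web_vitals(field_data)
passes_all = all(passed for _, passed in results.values())
print(results, "passes all:", passes_all)
```

In this made-up data, layout shifts experienced by real users fail the CLS threshold, something a lab run with a high Performance Score would not necessarily reveal.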

Performance Score is relative to device type and speed trends

Every metric depends on the context it’s collected in (device type and CPU, network speed, latency, location and so on). Performance Score makes the device type difference even more prominent by using different reference points (based on real user browsing data) to grade mobile and desktop speed relative to what’s considered “poor”, “needs improvement”, or “good”.

What’s even more critical is that mobile scoring is more lenient than desktop scoring: the same metric value earns a higher score on mobile, which makes a high Performance Score easier to reach there. This happens because sites are generally slower on mobile than on desktop (for now), and the reference points reflect that.

Because the score is relative to your metrics and the overall landscape of site speed, it’s worth asking if a higher score reflects the user experience you’re striving for.

Additionally, the Performance Score algorithm isn’t as well understood as we might assume, especially how it relates to device type and global page speed data. This makes the score a challenging portrayal of speed. To use Performance Score as an abstraction of speed, we must communicate the measurement context. For example: “Over the last 30 days, our Performance Score was 80 for mobile devices when tested from North Virginia.” Phil Walton has some excellent advice on how to communicate page speed results.

Performance Score is prone to variability

Variability is a major issue for web performance monitoring. Metrics and scores can fluctuate even without any changes to your site. This is to be expected but still causes plenty of confusion. We’ve observed that teams pay more attention to Performance Score variability than to fluctuations in individual metrics, yet changes to the score are harder to investigate (and explain) than changes to any single metric.

When all eyes are on a single measurement, a deviation is not welcome.

Lighthouse documents numerous sources of variability in scoring. Some high-impact sources of variability are unlikely to occur in lab measurements but likely to affect people browsing your sites. This means not only will your score fluctuate, but it also might not reflect the variability real users experience.
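A common way to make lab variability visible is to run the same test several times under identical settings and report the distribution rather than one reading. Here is a minimal sketch of such a summary; the function name `summarise_runs` and the five scores are hypothetical.

```python
import statistics


def summarise_runs(scores: list[float]) -> dict[str, float]:
    """Summarise repeated Performance Score readings taken with identical test settings."""
    if len(scores) < 2:
        raise ValueError("need at least two runs to describe variability")
    return {
        "median": statistics.median(scores),
        "min": min(scores),
        "max": max(scores),
        "range": max(scores) - min(scores),
        "stdev": statistics.stdev(scores),  # sample standard deviation
    }


# Hypothetical scores from five back-to-back runs of an unchanged page.
runs = [78, 85, 81, 90, 79]
summary = summarise_runs(runs)
print(f"median {summary['median']}, range {summary['min']}-{summary['max']}")
```

Reporting the median alongside the spread (and the test settings) keeps a single lucky or unlucky run from being read as the page’s speed. It still only describes lab variability, not what real users experience.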

Performance Score doesn’t make it easier to talk about speed

The goal of the Performance Score was to simplify understanding speed, but it hasn’t delivered on its promise. Because of its variability and its dependence on test settings, it creates more confusion than clarity (why does my score change? where does the drop come from?).

Knowledge about what the score encompasses isn’t evenly distributed either, leading to assumptions that a higher score equals bulletproof page speed in all aspects. This is made worse by a lack of practice in communicating the context of measurements. Without specifying the conditions under which the score is accurate, we again make it easy to draw sweeping conclusions about user experience.

What was supposed to be simple, in reality, creates accuracy minefields for us to tiptoe around.

Performance Score can be gamed

Like many other tools, Lighthouse can’t cover all testing edge cases, which leaves room for altering the score (on purpose or not). While it’s hard to estimate how feasible gaming the Performance Score would be in the real world, some performance experts have built proof-of-concept demonstrations showing that a slow site can receive a score of 100. We’ve also seen reports of tools potentially implementing behaviours that exploit loopholes in metric measurement to obtain faster readings for metrics such as Largest Contentful Paint.

Automated monitoring is fantastic, but metric algorithms aren’t free of exploitation possibilities (or bugs).

When we treat Performance Score as a single source of speed truth, we risk misunderstanding and misrepresenting real user experience.

Performance Score doesn’t matter for SEO

Many teams believe that Google uses the Lighthouse Performance Score to rank their sites. This is false.

The Performance Score has no direct influence on your search ranking.

Google evaluates pages based on page experience, content, relevance, quality, engagement metrics and other factors. Site speed data for ranking purposes is collected from real Chrome user sessions, not from lab tests. Core Web Vitals are now the page speed measurements that matter for search ranking. Although two of them (Largest Contentful Paint and Cumulative Layout Shift) feed into the Performance Score algorithm, the score itself plays no part in ranking. If your team is focusing on SEO, you should be monitoring and optimising for Core Web Vitals instead.

Performance Score has been overtaken by Core Web Vitals

Since the Core Web Vitals announcement, we’ve observed a gradual move away from promoting and recommending the Performance Score. Right now, the vast majority of communication about speed and metrics coming from Google emphasises the importance of Core Web Vitals and other upcoming metrics.

New PageSpeed Insights design, showcasing Core Web Vitals as the primary page speed assessment.

While Performance Score still exists in Lighthouse and a few other free testing tools, it’s clear Core Web Vitals are the metrics to watch. In the past, we’ve seen metrics get quietly deprecated (we can still measure some of them), which makes it harder to know what to pay attention to and can lead to focusing on the wrong areas. For now, Core Web Vitals are here to stay and offer a more holistic and robust portrayal of page speed.

We encourage teams to reconsider monitoring the Performance Score and to replace it with a set of metrics that portray multiple aspects of speed. We recommend tracking those metrics both synthetically and in the field to get a complete understanding of user experience and a reliable platform for page speed experimentation.
